pip install cgmm

We’ve just released v0.4 of cgmm, our open-source library for Conditional Gaussian Mixture Modelling.

If you’re new to cgmm: it’s a flexible, data-driven way to model conditional distributions beyond Gaussian or linear assumptions. It can:

- Model non-Gaussian distributions

- Capture non-linear dependencies

- Work in a fully data-driven way

This makes it useful in research and applied settings where complexity is the norm rather than the exception.
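
To make the idea concrete, here is a minimal NumPy sketch of one standard way to get a conditional Gaussian mixture: fit a joint mixture over (x, y), then condition each component on the observed x and reweight. This is not cgmm's API. The function name `condition_gmm` and the toy parameters are made up for illustration.

```python
import numpy as np

def condition_gmm(weights, means, covs, x, dx):
    """Condition a joint GMM over (x, y) on an observed x.

    weights: (K,), means: (K, dx+dy), covs: (K, dx+dy, dx+dy).
    Returns weights, means and covariances of the mixture p(y | x).
    """
    weights = np.asarray(weights, dtype=float)
    means = np.asarray(means, dtype=float)
    covs = np.asarray(covs, dtype=float)
    x = np.atleast_1d(np.asarray(x, dtype=float))

    K = len(weights)
    log_w = np.empty(K)
    cond_means, cond_covs = [], []
    for k in range(K):
        mu_x, mu_y = means[k, :dx], means[k, dx:]
        S_xx = covs[k, :dx, :dx]
        S_xy = covs[k, :dx, dx:]
        S_yy = covs[k, dx:, dx:]

        diff = x - mu_x
        sol = np.linalg.solve(S_xx, diff)  # S_xx^{-1} (x - mu_x)

        # Unnormalised log weight: log(w_k) + log N(x | mu_x, S_xx)
        _, logdet = np.linalg.slogdet(S_xx)
        log_w[k] = np.log(weights[k]) - 0.5 * (
            diff @ sol + logdet + dx * np.log(2 * np.pi)
        )

        # Standard Gaussian conditioning formulas for component k
        cond_means.append(mu_y + S_xy.T @ sol)
        cond_covs.append(S_yy - S_xy.T @ np.linalg.solve(S_xx, S_xy))

    log_w -= np.logaddexp.reduce(log_w)  # normalise in log space
    return np.exp(log_w), np.array(cond_means), np.array(cond_covs)

# Toy joint mixture with one x dimension and one y dimension (hypothetical values)
w, m, c = condition_gmm(
    weights=[0.5, 0.5],
    means=[[-2.0, -1.0], [2.0, 1.0]],
    covs=[[[1.0, 0.8], [0.8, 1.0]], [[1.0, -0.5], [-0.5, 1.0]]],
    x=-2.0,
    dx=1,
)
print(w, m.ravel(), c.ravel())
```

Because the component weights depend on x, the resulting conditional density can be multi-modal and its mean can be non-linear in x, even though every component is Gaussian.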

What’s new in this release:

- Mixture of Experts (MoE): Softmax-gated experts with linear mean functions (Jordan & Jacobs, Hierarchical Mixtures of Experts and the EM Algorithm, Neural Computation, 1994)

- Direct conditional likelihood optimization: EM algorithm implementation from Jaakkola & Haussler (Expectation-Maximization Algorithms for Conditional Likelihoods, ICML 2000)
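
As a rough illustration of softmax-gated experts with linear mean functions, the sketch below evaluates the conditional density and mean of a small Mixture of Experts for scalar y. It is plain NumPy under assumed parameter shapes, not the cgmm implementation. All names (`moe_conditional_density`, `gate_W`, `exp_A`, and so on) and the toy parameter values are hypothetical.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def moe_gates_and_means(x, gate_W, gate_b, exp_A, exp_b):
    """x: (n, d). Returns gate probabilities and expert means, both (n, K)."""
    gates = softmax(x @ gate_W.T + gate_b)  # softmax gate, linear in x
    mu = x @ exp_A.T + exp_b                # linear mean of each expert
    return gates, mu

def moe_conditional_density(x, y, gate_W, gate_b, exp_A, exp_b, exp_sigma):
    """p(y | x) = sum_k g_k(x) * N(y | A_k x + b_k, sigma_k^2), scalar y."""
    gates, mu = moe_gates_and_means(x, gate_W, gate_b, exp_A, exp_b)
    z = (y[:, None] - mu) / exp_sigma
    comp = np.exp(-0.5 * z**2) / (exp_sigma * np.sqrt(2 * np.pi))
    return (gates * comp).sum(axis=1)

def moe_conditional_mean(x, gate_W, gate_b, exp_A, exp_b):
    """E[y | x] = sum_k g_k(x) * (A_k x + b_k)."""
    gates, mu = moe_gates_and_means(x, gate_W, gate_b, exp_A, exp_b)
    return (gates * mu).sum(axis=1)

# Two experts with slopes +1 and -1; the gate picks one depending on the sign of x
gate_W = np.array([[4.0], [-4.0]])
gate_b = np.zeros(2)
exp_A = np.array([[1.0], [-1.0]])
exp_b = np.zeros(2)
exp_sigma = np.array([0.3, 0.3])

x = np.array([[2.0], [-2.0], [0.0]])
cond_mean = moe_conditional_mean(x, gate_W, gate_b, exp_A, exp_b)
print(cond_mean)
```

With these toy parameters the conditional mean behaves roughly like |x|: each expert is linear, but the gate switches between them, which is how the model captures non-linear dependencies.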

New examples and applications include:

- VIX volatility Monte Carlo simulation (non-linear, non-Gaussian SDEs)

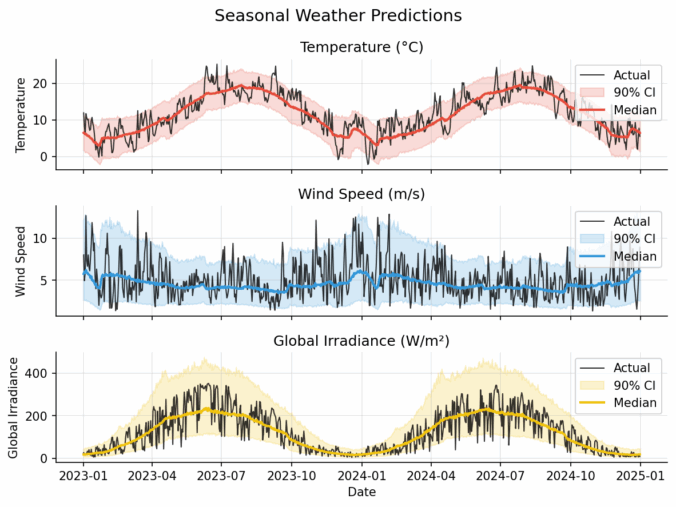

- Hourly and seasonal forecasts of temperature, windspeed, and light intensity

- The IRIS dataset and other sklearn benchmarks

- Generative modelling of MNIST handwritten digits

Explore more:

- Docs: https://cgmm.readthedocs.io

- PyPI: https://pypi.org/project/cgmm/

- GitHub: https://github.com/sitmo/cgmm

If you find this useful, please share it or give it a ⭐ on GitHub. Your feedback and contributions make a big difference.

This is not investment advice. The library continues to evolve, and we welcome ideas, feature requests, and real-world examples!